
in most light conditions, except for outdoor light, because of the high infrared output of the sun. Additionally, the cameras triangulated the position of the user much more efficiently than the ultrasonic system, primarily because light travels substantially faster than sound. Finally, whereas the sonar speakers sent signals serially, the cameras in the infrared system captured images simultaneously. A new graphical version of the software, called Flashtrack, was developed for the infrared system and written primarily by Badger.

Around the same time as the development of the infrared system, APR received a CANARIE grant to develop a networked version of the tracking system. This networked version of the GAMS system is the current iteration in use and the focus of the rest of this paper.

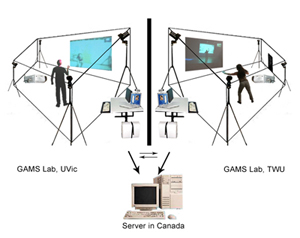

The networked GAMS system is configured so that a Control Centre manages the information passed from one remote site to another. Positional data is passed to the Control Centre and then on to the remote site, so that a remote user can be virtually present and control media over the Internet. The positional data is sent via UDP, a lightweight transport protocol in the Internet protocol suite. Unlike TCP, UDP does not acknowledge or retransmit lost packets, and this lower overhead makes it better suited to real-time media control. In essence, the x, y, z positions on the 3D grid and the speed of movement, or velocity, of the user are transmitted to the Control Centre. The Control Centre can then pass this positional and velocity information on to the remote site. Additionally, the Control Centre can manage several "Universes" that define who the remote users are, meaning that a number of locations can use the system at the same time.
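
The data flow described above can be illustrated with a minimal sketch. The wire format is not documented here, so everything in this example is an assumption made for illustration: the field layout (`PACKET_FORMAT`), the numeric universe and tracker identifiers, and the function names `encode_sample`, `decode_sample`, and `send_sample` are all hypothetical. The sketch only shows how one positional sample might be packed into a single UDP datagram and sent without waiting for an acknowledgement.

```python
import socket
import struct

# Hypothetical wire format (an assumption, not the actual GAMS format):
# universe id and tracker id as unsigned 16-bit integers, followed by
# x, y, z and velocity as 32-bit floats, all in network byte order.
PACKET_FORMAT = "!HHffff"


def encode_sample(universe, tracker, x, y, z, velocity):
    """Pack one positional sample into a fixed-size binary payload."""
    return struct.pack(PACKET_FORMAT, universe, tracker, x, y, z, velocity)


def decode_sample(payload):
    """Unpack a payload produced by encode_sample into a dictionary."""
    universe, tracker, x, y, z, velocity = struct.unpack(PACKET_FORMAT, payload)
    return {
        "universe": universe,
        "tracker": tracker,
        "position": (x, y, z),
        "velocity": velocity,
    }


def send_sample(address, payload):
    """Send one datagram and return immediately.

    UDP provides no acknowledgement or retransmission, so a lost sample
    is simply superseded by the next one.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, address)
```

A sender would call `send_sample(("192.0.2.10", 9000), encode_sample(1, 7, 1.5, 0.25, 2.0, 0.5))` for each new reading, where the address is a placeholder. Dropping a stale sample rather than waiting for it to be resent is the trade-off that keeps latency low for live control.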

This version resulted in a significant shift in the way interaction could be enacted and in the first instance of telepresence using this system. Now, multiple users could ostensibly interact together from different locations in their own spaces and "see" one another in the system. More importantly for this study, if one user moved her body and triggered a robotic light in her space, that movement could occur at approximately the same time in the remote location, thus activating the robotic light telepresently (Fig. 5).

Figure 5. The networked system


Programming the space on a 3D grid with this version of the system meant that media elements could be placed in particular locations on that grid, essentially creating zones where certain sounds, images, text, or any other media element could be found. Thus, when a performer enters a zone where musical notes have been programmed, for example, these notes are played as the performer passes her hand through that zone. Performers, in this way, can play notes in any way they wish, depending on the placement of the trackers. Not only can the audience hear the notes played, but this information is also passed via the system over a high-speed Internet connection to the remote performer in his lab. The first art piece to use the networked version of the tracking system was Virtual DJ by Gibson, who gave a remote, networked performance from his location in Victoria to another in Montréal in late 2002 for the CANARIE conference. Running on a research network, the system had minimal latency, around 70 ms, allowing for relatively transparent interaction. Another remote version of Virtual DJ was performed at the NEXT 2.0 conference between the University of Victoria and Karlstad, Sweden, in May 2003. The latency in this performance was slightly more noticeable, at 150-200 ms, because of the distance between the two locations.
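
The zone-based programming of the 3D grid described in this section can be sketched in a few lines. This is an illustration under stated assumptions, not the GAMS implementation: zones are modelled as axis-aligned boxes, notes are represented by MIDI-style note numbers, and the names `Zone` and `notes_triggered` are hypothetical. A note fires only when a tracker crosses into a zone, not on every sample taken while it remains inside.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """An axis-aligned box on the 3D grid with a note attached (assumed model)."""
    name: str
    lo: tuple   # (x, y, z) minimum corner, inclusive
    hi: tuple   # (x, y, z) maximum corner, exclusive
    note: int   # MIDI-style note number, an assumption for illustration

    def contains(self, point):
        # True when every coordinate lies inside the box on its axis.
        return all(a <= v < b for a, v, b in zip(self.lo, point, self.hi))


def notes_triggered(zones, previous, current):
    """Return the notes of zones the tracker has just entered.

    `previous` is the last known position, or None for the first sample.
    """
    entered = []
    for zone in zones:
        was_inside = previous is not None and zone.contains(previous)
        if zone.contains(current) and not was_inside:
            entered.append(zone.note)
    return entered
```

With two adjacent zones defined, moving a tracker from the first into the second returns only the second zone's note, while small movements inside one zone return nothing. The same lookup could map positions to images or video clips instead of notes.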

In Fall 2004 a Canada Foundation for Innovation grant awarded to Gibson made it possible for him, working along with Bauer, to research ways to create behaviors for the control of media (such as video, animation, and images) via the Flash Track software and the Control Centre. This work made it possible for positional information to be sent from Flash Track to the Control Centre and then on to a third computer running Macromedia Director. There, the behaviors allow the user to match locations in the room with videos and images in Director and to manipulate these image files through movement.

The first piece to use this technology was When Ghosts Will Die by Gibson and Dene Grigar, a performance-installation that debuted in Dallas, Texas, in April 2005 as a work-in-progress. This experiment in interacting with media elements was repeated with a networked performance of Virtual DJ, originally a non-networked piece, by Gibson and Grigar in the summer of 2005. For that performance Gibson, working from his studio in Victoria, BC, moved robotic lights and produced sound in Grigar's lab in Denton, Texas, while she simultaneously did the same in his studio from her space. Documented on video, the work clearly demonstrates the notion of embodied telepresence. The addition of webcam technology, notably iSight and iChat, provided a visual representation of each remote performer in each local space, enhancing the sense of performing together.

Since September 2005, changes to the GAMS system have included tagless tracking and voice-activated interaction, innovations that free the user from trackers or wearables and allow spoken words to command behaviors. Bauer's Life Tastes Good, which debuted at the Collisions conference in Victoria, Canada, in September 2005, was the first art piece to use this version of GAMS. Because no mediating device is needed between users and sensors, the interaction is a direct relationship between human bodies and machines across space.

3 GAMS' Application for Embodied Telepresent Collaboration

As the previous section detailed, the evolution of motion tracking technology, specifically the system referred to as the Gesture and Media System, shifted interaction from that of a single user interacting with sensors and computing devices in a 2D space via ultrasonic frequencies to that of multiple users interacting with
