
multiple devices and elements

in remote locations in 3D space via infrared technology. The

question posed at the beginning of this essay, "In what

ways can telepresence be enhanced by motion tracking technology

in performance?" now elicits this response: with increased

control of data objects like images, video, sound, and light

as well as hardware and equipment such as computers, robotic

lights, and projectors, by multiple users across vast distances,

almost simultaneously, and potentially without

mediating devices, like trackers or wearable technology, and

with the human body, through movement and voice.

Such enhanced telepresence offers

much for collaborations involving digital media projects where

hardware, software, and peripherals must be controlled in real-time

by teams working together at-a-distance or where physical computing

research is undertaken. Thus, this paper concludes with a discussion

of two collaborative projects undertaken with the GAMS system:

the production of Gibson and Grigar's When Ghosts Will Die

project and their preparation for the networked version of Virtual

DJ.

3.1 The Production of When Ghosts Will Die

Inspired by the play Copenhagen,

by Michael Frayn, When Ghosts Will Die tells the story of the dangers of

nuclear proliferation and is historically centered on the development

of nuclear weapons at the height of Cold War paranoia (Gibson

and Grigar). A narrative performance-installation that utilizes

multi-sensory media elements such as sound, music, voice, video,

light, images, and text controlled by motion-tracking technology,

it unfolds in three levels, divisions comparable to those

associated with games. (Fig. 6) As mentioned previously, the

piece constituted the first iteration of the GAMS system that

allowed users to: 1) create behaviors for the control of

media elements like video, animation, and images via software

and the Control Centre; 2) send positional information from Flash

Track to the Control Centre and, then, onto a third computer

running Macromedia Director; and 3) match locations

in the room with videos and images in Director and manipulate

these image files with user movement.
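
The third capability, matching locations in the room with media, can be illustrated with a minimal sketch. It is not the GAMS, Flash Track, or Director code; the names `Zone`, `ROOM_MAP`, and `media_for_position`, the coordinates, and the media identifiers are all hypothetical, and the room is assumed to be divided into rectangular zones on the floor plane.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Zone:
    """A rectangular zone of the room (hypothetical units: metres)."""
    name: str
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    media: str  # identifier of the video or image assigned to this zone

    def contains(self, x: float, y: float) -> bool:
        # Lower bounds are inclusive, upper bounds exclusive, so that
        # adjacent zones never both claim a position on their shared edge.
        return self.x_min <= x < self.x_max and self.y_min <= y < self.y_max


def media_for_position(room_map: List[Zone], x: float, y: float) -> Optional[str]:
    """Return the media assigned to the zone containing (x, y), if any."""
    for zone in room_map:
        if zone.contains(x, y):
            return zone.media
    return None


# One hypothetical room map with two zones; the piece itself used many maps.
ROOM_MAP = [
    Zone("stage_left", 0.0, 2.0, 0.0, 4.0, "video_a"),
    Zone("stage_right", 2.0, 4.0, 0.0, 4.0, "image_b"),
]
```

In such a scheme, each tracked position reported for a performer would be passed to `media_for_position`, and the returned identifier would tell the playback computer which video or image to show or manipulate.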

Figure 6. Steve Gibson and Dene

Grigar's When Ghosts Will Die

Thus, working at-a-distance from

their spaces in Victoria and Denton, Gibson and Grigar conceptualized,

researched, wrote, produced media elements, programmed,

fine-tuned, and rehearsed the piece from December 2004 until its

debut in Dallas, TX as a one-performer "work-in-progress"

in April 2005. They continued to expand the work to its current,

finished iteration as a two-performer piece that premiered in


Victoria, BC, in September 2005.

In less than a year and with only one brief face-to-face meeting

in Dallas for a performance, Gibson and Grigar were able to stage

a piece 25 minutes long (comprising three levels, with

50 different room maps divided into numerous zones, and six different

media elements programmed in those zones) that entailed

multiple computers, sensors, projectors, robotic lights, and

other peripherals.

An obvious discussion of collaboration,

then, could focus on the methods used for any one of these steps

in developing the piece; however, a more salient one relating

to this paper's topic is one that emerged during the development

of the third level (the final part of the piece, relating

to the aftermath of nuclear destruction), focusing on

the theme of the work. As mentioned, When Ghosts Will Die

was inspired by Copenhagen, and a refrain in that play

is the statement that the destruction caused by nuclear bombs

would be so complete that "even the ghosts will die"

(Frayn 79). The GAMS technology made it possible to represent this ghost

theme in the work. In brief, Grigar appears in Gibson's

space as a ghostlike figure represented only as light and sound

that he interacts with, and Gibson appears in hers in the same

way. So employed, embodied telepresence can both enable such

a collaboration and be incorporated thematically, becoming a

narrative element as well as a methodology.

3.2 Preparation for Virtual DJ

Virtual DJ was originally a non-networked piece created by Gibson to explore

motion-tracking technology as an expressive tool for interaction

with sound and lights (Gibson). Later, after the development

of the GAMS system that made it possible for multiple users to

interact with one another at-a-distance, the piece was expanded

for networked performances. Gibson and Grigar staged a networked

performance of Virtual DJ in the summer of 2005. Gibson,

working from his studio in Victoria, BC, moved robotic lights

and produced sound in Grigar's lab in Denton, Texas while she

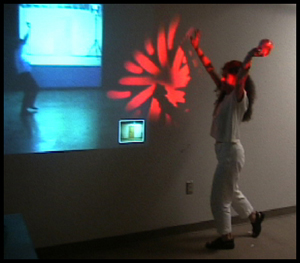

simultaneously did the same in his from her space. (Fig. 7)

Figure 7. Steve Gibson's Networked

Virtual DJ
